This essay was originally published in the Current Contents print editions June 20, 1994, when Clarivate Analytics was known as The Institute for Scientific Information (ISI).

Librarians and information scientists have been evaluating journals for at least 75 years. Gross and Gross conducted a classic study of citation patterns in the ’20s.1 Others, including Estelle Brodman with her studies in the ’40s of physiology journals and subsequent reviews of the process, followed this lead.2 However, the advent of the Clarivate Analytics citation indexes made it possible to do computer-compiled statistical reports not only on the output of journals but also in terms of citation frequency. And in the ’60s we invented the journal “impact factor.” After using journal statistical data in-house to compile the Science Citation Index (SCI) for many years, Clarivate Analytics began to publish Journal Citation Reports (JCR)3 in 1975 as part of the SCI and the Social Sciences Citation Index (SSCI).

Informed and careful use of these impact data is essential. Users may be tempted to jump to ill-formed conclusions based on impact factor statistics unless several caveats are considered.

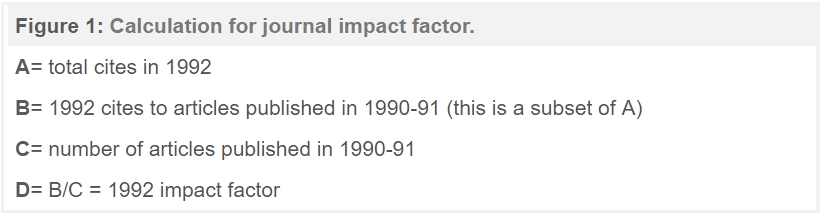

The JCR provides quantitative tools for ranking, evaluating, categorizing, and comparing journals. The impact factor is one of these; it is a measure of the frequency with which the “average article” in a journal has been cited in a particular year or period. The annual JCR impact factor is a ratio between citations and recent citable items published. Thus, the impact factor of a journal is calculated by dividing the number of current-year citations to the source items published in that journal during the previous two years by the number of source items it published in those two years (see Figure 1).
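The ratio described above can be sketched in a few lines of Python. This is only an illustration: the function name and the journal counts are hypothetical and are not taken from the JCR or from Figure 1.

```python
def impact_factor(citations_to_prior_two_years: int, items_prior_two_years: int) -> float:
    """Current-year citations to items from the previous two years,
    divided by the number of source items published in those two years."""
    if items_prior_two_years <= 0:
        raise ValueError("need at least one source item in the two-year window")
    return citations_to_prior_two_years / items_prior_two_years


# Hypothetical journal (illustrative numbers only):
# citations received in 1992 by its 1990 and 1991 items, and the item counts.
cites_1992_to_1990 = 300
cites_1992_to_1991 = 200
items_1990 = 120
items_1991 = 130

example_if = impact_factor(
    cites_1992_to_1990 + cites_1992_to_1991,
    items_1990 + items_1991,
)
print(example_if)  # 500 citations / 250 items
```

Because the denominator is the number of items published, a large journal does not gain an advantage simply by having more articles available to be cited.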

The impact factor is useful in clarifying the significance of absolute (or total) citation frequencies. It eliminates some of the bias of such counts, which favor large journals over small ones, frequently issued journals over less frequently issued ones, and older journals over newer ones. In the last case especially, the favored journals have a larger citable body of literature than smaller or younger journals. All things being equal, the larger the number of previously published articles, the more often a journal will be cited.4,5

There have been many innovative applications of journal impact factors. The most common involve market research for publishers and others. But, primarily, JCR provides librarians and researchers with a tool for the management of library journal collections. In market research, the impact factor provides quantitative evidence for editors and publishers for positioning their journals in relation to the competition—especially others in the same subject category, in a vertical rather than a horizontal or intradisciplinary comparison. JCR data may also serve advertisers interested in evaluating the potential of a specific journal.

Perhaps the most important and recent use of impact is in the process of academic evaluation. The impact factor can be used to provide a gross approximation of the prestige of journals in which individuals have been published. This is best done in conjunction with other considerations such as peer review, productivity, and subject specialty citation rates. As a tool for management of library journal collections, the impact factor supplies the library administrator with information about journals already in the collection and journals under consideration for acquisition. These data must also be combined with cost and circulation data to make rational decisions about purchases of journals.

The impact factor can be useful in all of these applications, provided the data are used sensibly. It is important to note that subjective methods can be used in evaluating journals as, for example, by interviews or questionnaires. In general, there is good agreement on the relative value of journals in the appropriate categories. However, the JCR makes possible the realization that many journals do not fit easily into established categories. Often, the only differentiation possible between two or three small journals of average impact is price or subjective judgments such as peer review.

Clarivate Analytics does not depend on the impact factor alone in assessing the usefulness of a journal, and neither should anyone else. The impact factor should not be used without careful attention to the many phenomena that influence citation rates, such as the average number of references cited in the average article. The impact factor should be used with informed peer review. In the case of academic evaluation for tenure, it is sometimes inappropriate to use the impact of the source journal to estimate the expected citation frequency of a recently published article. Again, the impact factor should be used with informed peer review. Citation frequencies for individual articles are quite varied.

There are many artifacts that can influence a journal’s impact and its ranking in journal lists, not the least of which is the inclusion of review articles or letters. This is illustrated in a study of the leading medical journals published in the Annals of Internal Medicine.6

Review articles generally are cited more frequently than typical research articles because they often serve as surrogates for earlier literature, especially in journals that discourage extensive bibliographies. In the JCR system any article containing more than 100 references is coded as a review. Articles in “review” sections of research or clinical journals are also coded as reviews, as are articles whose titles contain the word “review” or “overview.”
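The three coding rules in the paragraph above can be expressed as a small predicate. This is a minimal sketch rather than the actual JCR coding procedure: the function and parameter names are hypothetical, and matching “review” or “overview” as whole words, case-insensitively, is an assumption about how the title rule is applied.

```python
import re

# Assumption: the title rule matches "review" or "overview" as whole words.
_REVIEW_WORDS = re.compile(r"\b(review|overview)\b", re.IGNORECASE)


def is_coded_as_review(title: str, reference_count: int,
                       in_review_section: bool = False) -> bool:
    """Apply the three rules described in the text, in order."""
    if reference_count > 100:   # more than 100 references
        return True
    if in_review_section:       # published in a journal's "review" section
        return True
    # title contains the word "review" or "overview"
    return _REVIEW_WORDS.search(title) is not None


print(is_coded_as_review("Enzyme kinetics in yeast", 150))      # long bibliography
print(is_coded_as_review("Protein folding: an overview", 20))   # title rule
print(is_coded_as_review("Enzyme kinetics in yeast", 40))       # none of the rules
```

Note that exactly 100 references does not trigger the first rule, since the text says “more than 100.”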

The Source Data Listing in the JCR not only provides data on the number of reviews in each journal but also provides the average number of references cited in that journal’s articles. Naturally, review journals have some of the highest impact factors. Often, the first-ranked journal in the subject category listings will be a review journal. For example, under Biochemistry, the journal topping the list is Annual Review of Biochemistry with an impact factor of 35.5 in 1992.

It is widely believed that methods articles attract more citations than other types of articles. However, this is not in fact true. Many journals devoted entirely to methods do not achieve unusual impact. But it is true that among the most cited articles in the literature there are some super classics that give this overall impression. It should be noted that the chronological limitation on the impact calculation eliminates the bias super classics might introduce. Absolute citation frequencies are biased in this way, but, on occasion, a hot paper might affect the current impact of a journal.

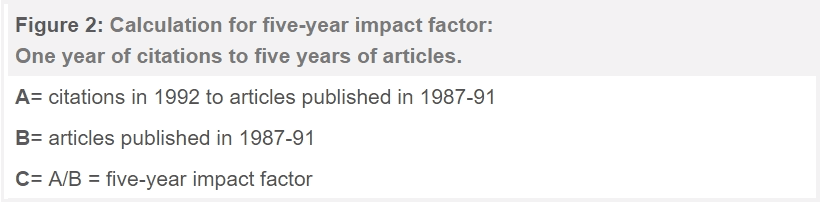

Different specialties exhibit different ranges of peak impact. That is why the JCR provides subject category listings. In this way, journals may be viewed in the context of their specific field. Still, a five-year impact may be more useful to some users and can be calculated by combining the statistical data available from consecutive years of the JCR (see Figure 2). It is rare to find that the ranking of a journal will change significantly within its designated category unless the journal’s influence has indeed changed.

An alternative five-year impact can be calculated by adding the citations made in 1988-92 to articles published in the same five-year period. And yet another is possible by selecting one or two earlier years as factor “B” above.
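A five-year impact of the first kind can be sketched as follows. Because the definitions in Figure 2 are not reproduced in the text, this assumes the five-year figure follows the same pattern as the two-year calculation: one year of citations divided by the items published in the five preceding years. The function name and all counts are hypothetical.

```python
from typing import Mapping


def five_year_impact(citations_by_pub_year: Mapping[int, int],
                     items_by_pub_year: Mapping[int, int],
                     citing_year: int) -> float:
    """Citations received in `citing_year` by items from the five
    preceding years, divided by the number of those items (assumed form)."""
    window = range(citing_year - 5, citing_year)  # e.g. 1987..1991 for 1992
    total_cites = sum(citations_by_pub_year.get(year, 0) for year in window)
    total_items = sum(items_by_pub_year.get(year, 0) for year in window)
    if total_items == 0:
        raise ValueError("no source items in the five-year window")
    return total_cites / total_items


# Hypothetical data gathered from consecutive annual reports:
# citations made in 1992, keyed by the publication year of the cited item.
cites_in_1992 = {1987: 100, 1988: 150, 1989: 200, 1990: 250, 1991: 300}
items = {1987: 80, 1988: 90, 1989: 100, 1990: 110, 1991: 120}

five_year = five_year_impact(cites_in_1992, items, citing_year=1992)
print(five_year)  # 1000 citations / 500 items
```

The alternative described above, which pools citations made across the whole five-year period, would need one such citation table per citing year rather than a single table.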

While Clarivate Analytics does manually code each published source item, it is not feasible to code individually the 12 million references we process each year. Therefore, journal citation counts in JCR do not distinguish between letters, reviews, or original research. So, if a journal publishes a large number of letters, there will usually be a temporary increase in references to those letters. Letters to the Lancet may indeed be cited more often than letters to JAMA or vice versa, but the overall citation count recorded would not take this artifact into account. Detailed computerized article-by-article analyses or audits can be conducted to identify such artifacts.

Some of the journals listed in the JCR are not citing journals, but are cited-only journals. This is significant when comparing journals by impact factor because the self-citations from a cited-only journal are not included in its impact factor calculation. Self-citations often represent about 13% of the citations that a journal receives. The cited-only journals with impact factors in the JCR Journal Rankings and Subject Category Listing may be ceased or suspended journals, superseded titles, or journals that are covered in the science editions of Current Contents but not in a citation index.

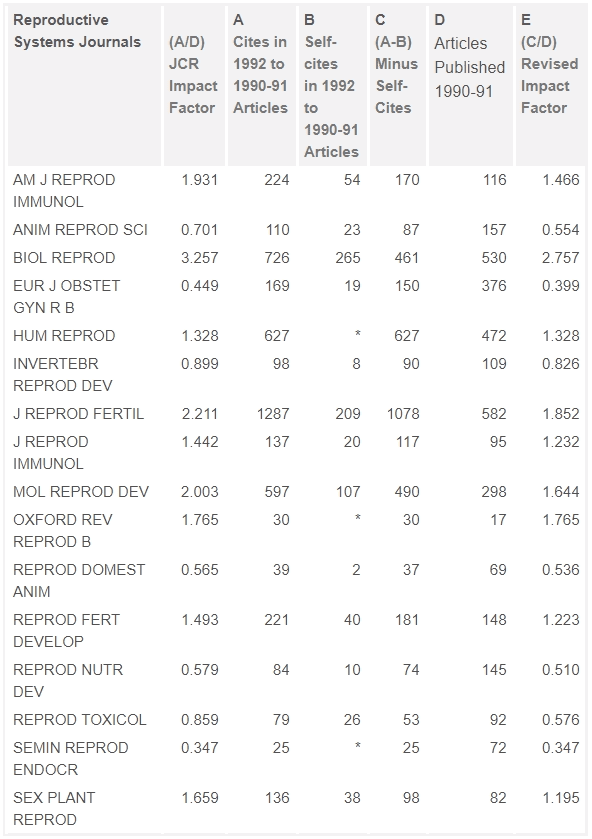

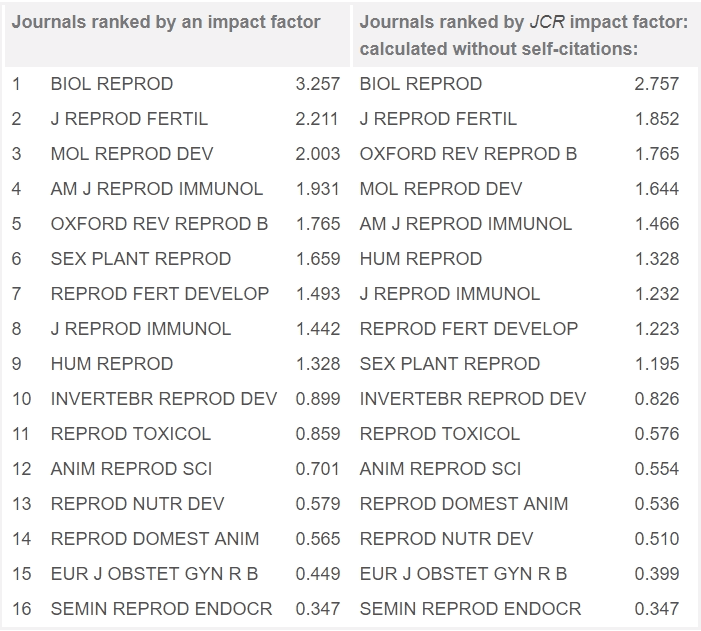

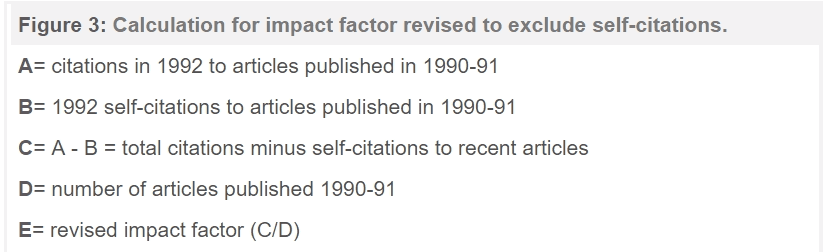

Users can identify cited-only journals by checking the JCR Citing Journal Listing. Furthermore, users can establish analogous impact factors (excluding self-citations) for the journals they are evaluating, using the data given in the Citing Journal Listing (see Figure 3).

(see Table 1 for numerical example)
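As a rough illustration of the adjustment described above, the sketch below subtracts a journal's self-citations before dividing by its source items. The function name and the counts are hypothetical; they are not the figures from Table 1 or Figure 3.

```python
def impact_without_self_citations(total_citations: int,
                                  self_citations: int,
                                  source_items: int) -> float:
    """Impact factor recomputed after removing the journal's citations
    to its own items from the numerator."""
    if source_items <= 0:
        raise ValueError("need at least one source item")
    if not 0 <= self_citations <= total_citations:
        raise ValueError("self-citations must be between 0 and total citations")
    return (total_citations - self_citations) / source_items


# Hypothetical journal: 500 current-year citations to its items from the
# previous two years, 65 of them self-citations (13%), and 250 source items.
with_self = 500 / 250
without_self = impact_without_self_citations(500, 65, 250)
print(with_self, without_self)
```

Putting every journal in a comparison on this footing avoids penalizing cited-only journals, whose own references are never counted.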

A user’s knowledge of the content and history of the journal studied is very important for appropriate interpretation of impact factors. Situations such as those mentioned above and others such as title change are very important, and often misunderstood, considerations.

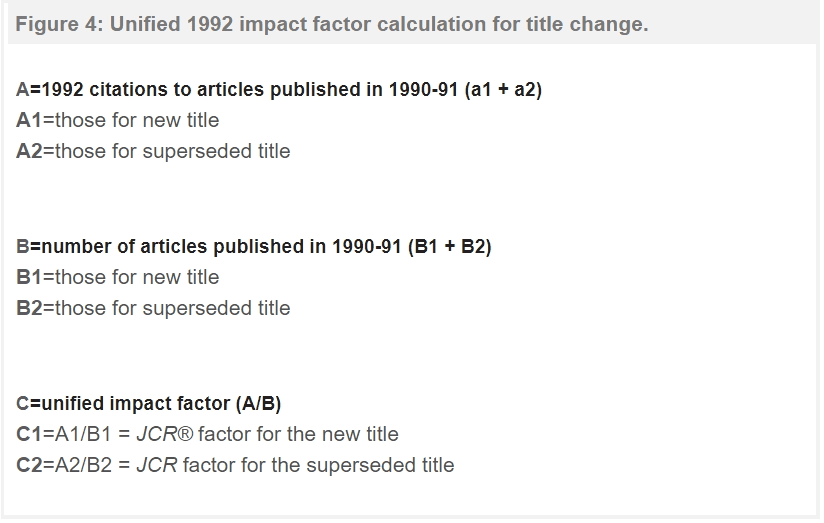

A title change affects the impact factor for two years after the change is made. The old and new titles are not unified unless the titles are in the same position alphabetically. In the first year after the title change, the impact is not available for the new title unless the data for old and new can be unified. In the second year, the impact factor is split. The new title may rank lower than expected and the old title may rank higher than expected because only one year of source data is included in its calculation (see Figure 4). Title changes for the current year and the previous year are listed in the JCR guide.
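The effect of a split, and one way of unifying it by hand, can be sketched as below. Since Figure 4 is not reproduced in the text, the formula is an assumption: citations and source items for the old and new titles are pooled before dividing. All names and numbers are hypothetical.

```python
def unified_impact(cites_old_title: int, items_old_title: int,
                   cites_new_title: int, items_new_title: int) -> float:
    """Pool both titles' current-year citations and both titles' source
    items from the two-year window, then take the ratio (assumed form)."""
    total_items = items_old_title + items_new_title
    if total_items <= 0:
        raise ValueError("need at least one source item across both titles")
    return (cites_old_title + cites_new_title) / total_items


# Hypothetical second year after a title change: the old title carries the
# earlier year of the two-year window and the new title the later year.
old_cites, old_items = 180, 100
new_cites, new_items = 120, 100

print(old_cites / old_items)   # split figure for the old title
print(new_cites / new_items)   # split figure for the new title
print(unified_impact(old_cites, old_items, new_cites, new_items))
```

In this made-up case the pooled figure falls between the two split figures, which matches the pattern described above of the old title ranking higher and the new title lower than expected.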

The impact factor is a very useful tool for evaluation of journals, but it must be used with discretion. Considerations include the amount of review or other types of material published in a journal, variations between disciplines, and item-by-item impact. The journal’s status in regard to coverage in the Clarivate Analytics databases, as well as the occurrence of a title change, is also very important. In the next essay we will look at some examples of how to put tools for journal evaluation into use.

Dr. Eugene Garfield

Founder and Chairman Emeritus, ISI